Is Improving Software Performance Now Bad?

NVidia® stock-holders seem to think so.

Introduction

This article is intended to be a somewhat cynical analysis of what we can discover from the crash in NVidia’s stock price after the announcement of the DeepSeek large language model. You can see it happening on January 27th, when the stock plunged by approximately 20%.[1]

To help with the analysis, I make a lot of use of the excellent book “Technofeudalism: What Killed Capitalism” by Yanis Varoufakis.

I'll try to apply the ideas from the book to the market for high-performance computing hardware. The aim is to understand what the stock crash may be about, and what it tells us about the motivations of hardware manufacturers, or at least of their investors. Of course, the stock price has a major influence on senior managers’ bonuses, so what investors think probably has a significant influence on the people who decide what a company does!

Cory Doctorow's description of enshittification is also highly relevant, since this seems to be an example of why enshittification happens.

Obviously, any errors in doing this, or in explaining the book, are mine.

Economic Systems

Economists have invested a lot of time in analysing the different ways in which economies and markets work, and how those affect the people living in them. I am not an economist, so if you want more detail here, find some economics text-books and read them (though perhaps also maintain a cynical attitude, since many fail to consider things like Modern Monetary Theory). To understand the market in high-performance computers, I believe that these market classes are useful.[2]

Free Market

Here there are many suppliers each making fungible products, and there are many purchasers, so no purchaser is dependent on a single supplier, and no supplier is dependent on a single purchaser.

Monopoly

In a perfect monopoly there is only a single supplier for a given item. If you need that item you have to buy it from them, and pay the price they demand. However, a monopoly is also considered to exist where a firm controls a significant share of the market (in the UK, legislation sets this at >25%). See Wikipedia if you want a much longer discussion of monopolies.

That seems simple, but there are issues that make it more complicated.

What is the Market?

At first that seems obvious, but consider this example. Suppose there is a fruit market in which one stall sells only apples, another only bananas, a third only plums, a fourth only strawberries, and a fifth only oranges. Is there a monopoly? If you consider the market in fruit, then each stall can claim that it has only 20% of the market, and there is no monopoly. However, if you consider the market in any single fruit, then there are clearly five separate monopolies, since each stall has 100% of the market for the fruit it sells.
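To make the arithmetic concrete, here is a minimal Python sketch. The sales figures are invented and are equal for every stall. The 25% threshold is the UK figure quoted above. The only thing that changes between the two calls is how the market is defined.

```python
# Hypothetical stalls: each sells exactly one fruit, with equal (invented) sales.
STALLS = {
    "apple stall": ("apples", 100),
    "banana stall": ("bananas", 100),
    "plum stall": ("plums", 100),
    "strawberry stall": ("strawberries", 100),
    "orange stall": ("oranges", 100),
}

# Share of the market above which the UK treats a firm as a monopoly (from the text).
UK_MONOPOLY_THRESHOLD = 0.25


def market_share(stall: str, market: set[str]) -> float:
    """Share of `stall` in a market defined as the set of fruits it covers."""
    fruit, sales = STALLS[stall]
    if fruit not in market:
        return 0.0
    total = sum(s for f, s in STALLS.values() if f in market)
    return sales / total


def is_monopoly(stall: str, market: set[str]) -> bool:
    """True if the stall's share of the chosen market exceeds the UK threshold."""
    return market_share(stall, market) > UK_MONOPOLY_THRESHOLD


# The market defined as "all fruit".
ALL_FRUIT = {fruit for fruit, _ in STALLS.values()}

if __name__ == "__main__":
    # Market = all fruit: the apple stall has 20%, so it is not a monopoly.
    print(market_share("apple stall", ALL_FRUIT), is_monopoly("apple stall", ALL_FRUIT))
    # Market = apples only: the same stall has 100%.
    print(market_share("apple stall", {"apples"}), is_monopoly("apple stall", {"apples"}))
```

Running it prints `0.2 False` and then `1.0 True`. The same stall either is or is not a monopoly, depending purely on where the boundary of the market is drawn.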

So, is there a monopoly or not?

The analogy with the CPU market should be fairly obvious, with the instruction set architecture (ISA) taking the place of the type of fruit.

Note that in such a market it is in every stall-holder’s interest to promote their fruit, for instance by providing recipes that use it. Similarly, a CPU vendor has an incentive to encourage code to be written that cannot be executed on other ISAs.

Feudalism (or Fiefdom)

In a feudal system people (vassals) are also bound to a single supplier, historically of the land which they farm, and have to pay their overlord for it. However, as well as paying rent they are also responsible for maintaining and improving the land, so their work increases the value of the landlord’s asset.

The vassals cannot move to another supplier because there is no spare capacity, so, in effect, the lords who own the land have a monopoly.

How do These Ideas Apply to Compute Hardware?

On the one hand, there may be markets with an obvious monopoly, where a single vendor effectively dominates the whole market. However, even when that is not the case, there is a significant danger that people writing software who aim to achieve the highest possible performance will, in effect, dig themselves into a fiefdom. By writing code that cannot easily be ported to a different vendor’s machines, they make moving so costly that it becomes effectively impossible to use any other vendor’s machines.

If we apply this to the CPU market, we can see several obvious fiefdoms based on instruction set architecture:-

x86-64: where there are two suppliers, AMD® and Intel®, but it is possible to write code that is not portable even between them.

ARM®: where there are multiple hardware suppliers.

RISC-V®: where there are multiple suppliers, but many non-standard extensions that invite you into a fiefdom.

Realistically, as soon as you start writing code in a language that is not standardised and supported by multiple hardware vendors, you are making yourself into a vassal. That can be writing in assembler code, but also includes writing in higher-level, vendor-specific languages.

In addition, one can consider that anyone writing code for a specific ISA is acting as a vassal to the controller of that architecture, since they are making machines based on that architecture more attractive. Thus, historically, people writing applications for classic x86-based Windows PCs were behaving as vassals to both Microsoft® and the x86 hardware vendors.

How Can One Escape?

The most obvious way is to write only code that is portable between vendors. However, that is hard. One of the pressures on software is to perform as well as possible on the current machine, and that may require vendor-specific code, which takes us back to being a vassal.

Standards

Software standards are critical in providing at least a partial route to portable code. For example, no vendor controls the C, C++ or Fortran language standards, and these languages are all required for serious HPC machines used for science applications.[3]

Whether hardware vendors like standards depends on their market share. Newcomers to a market like them, as they make it easier for customers to move their codes to the new machines, whereas monopolistic, deeply embedded, vendors are not so keen, as they may allow their vassals to escape.

The history of the Message Passing Interface (MPI) shows some of this. As Wikipedia explains, it came from the research/customer side, rather than from vendors. In the story I have heard, an important group of customers (US National Labs) approached the vendors, and had a dialogue along these lines:-

Customers: We’re going to create a message passing standard so that we can port our codes between different vendors’ machines, and would like you to be involved.

Vendors: That’s interesting, let us know when you’re done, and we’ll take a look.

Customers: And, just so you know, all of our next procurements will require full support of the standard.

Vendors: Ah, that’s different, how can we get involved?

The vendors’ position is hugely changed by the customers’ use of their monopsonistic power as a group. The standard is no longer something with no value to the vendor and no threat. Instead it becomes something dangerous that competitors could use to lock them out of the market. The vendors therefore need to be involved, to ensure that the standard is implementable on their hardware and that they know how to implement it.

As HPC folk know, MPI has become an extremely successful standard which was 30 years old in 2024, and has reached version 4.1. (A longevity which those of us in the room at the Bristol Suites in Dallas when we were specifying MPI-1 did not expect!)

What Does the GPU Market Look Like?

At first glance it seems to be a simple, almost perfect, monopoly. According to recent market share statistics NVidia has around 90% of the market (e.g. see “Nvidia's GPU market share hits 90% in Q4 2024 (gets closer to full monopoly)”). That is well above the 25% share which is deemed a monopoly here in the UK.

However, one can also consider it a fiefdom, since many (probably most) codes written for NVidia GPUs use CUDA®. CUDA is not a portable standard, but rather an NVidia-defined language (even though other vendors have invested in tools to translate it into more vendor-neutral languages, or in compilers that allow CUDA to run on their hardware[4]).

Below CUDA, if one wants to tie oneself even more to NVidia, is PTX, which is effectively an assembler language, so even more hardware specific.

By writing in these languages, people make it harder for themselves to move to another vendor, and so they become NVidia vassals.

Why Did DeepSeek Crash the Market?

What Would We Expect?

Since the market in NVidia stock crashed on news of DeepSeek releasing its code, one might expect that DeepSeek had demonstrated a high-performance AI implementation running on non-NVidia hardware, or, at least, written one in a portable language that would make such a transition easier.

What Does DeepSeek Actually Do?

Since the DeepSeek code is freely available on GitHub, we can look for ourselves.

Languages

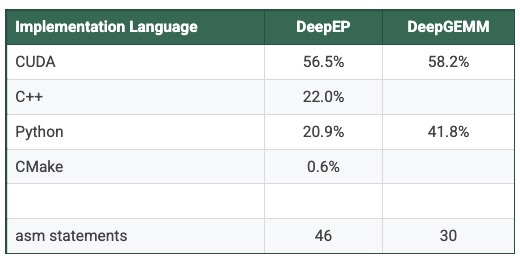

If we look at two critical libraries, we see these proportions of languages (as reported by GitHub, with asm statements counted using find, grep, and wc):-

The first thing to note is that most of the code is in CUDA, so running any of it on non-NVidia machines will be hard, and our first explanation of the market reaction cannot be correct; this is not code which is trivially portable to non-NVidia machines.

The second thing to note is that these critical parts of the code also use assembly code (PTX), accessed via asm statements from the CUDA code. The number of asm statements seems small, but each one exposes a single instruction as an inlined function that can then be called. So the count does not show the number of assembly instructions generated, but rather the number of different assembly instructions being used.
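The asm statements were counted with find, grep and wc, but the exact command isn't given. A rough Python equivalent might look like the sketch below. The file suffixes and the regular expression are my assumptions. Like a grep-based count, it counts asm statements in the source, not the instructions they generate.

The sample file uses a hypothetical `get_lane_id()` wrapper to illustrate the pattern described above: a single PTX instruction, here reading the `%laneid` special register, exposed as an inlined CUDA device function.

```python
import re
import tempfile
from pathlib import Path

# Suffixes assumed to contain CUDA/C++ source; adjust for the repository being examined.
SOURCE_SUFFIXES = {".cu", ".cuh", ".h", ".hpp", ".cpp"}

# Start of an inline-assembly statement: `asm(` or `asm volatile(`.
# The leading \b stops it matching inside identifiers such as `plasma(`.
ASM_START = re.compile(r"\basm\s*(?:volatile\s*)?\(")

# Hypothetical CUDA wrapper exposing one PTX instruction as an inlined device function.
SAMPLE_CU = """
__device__ __forceinline__ unsigned int get_lane_id() {
    unsigned int lane_id;
    asm volatile("mov.u32 %0, %%laneid;" : "=r"(lane_id));
    return lane_id;
}
"""


def count_asm_statements(root: str) -> dict[str, int]:
    """Map each source file under `root` (relative path) to its number of asm statements."""
    root_path = Path(root)
    counts: dict[str, int] = {}
    for path in sorted(root_path.rglob("*")):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="replace")
            n = len(ASM_START.findall(text))
            if n:
                counts[path.relative_to(root_path).as_posix()] = n
    return counts


if __name__ == "__main__":
    # Write the sample to a throwaway directory and count its asm statements.
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "kernel.cu").write_text(SAMPLE_CU)
        print(count_asm_statements(tmp))  # {'kernel.cu': 1}
```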

Code Analysis Conclusion

The DeepSeek code is not a way to escape from being an NVidia vassal; instead, it digs its users even deeper into NVidia dependence.

So, What Did the Market Dislike?

There are a couple of possibilities…

1) Software is Perfect

Perhaps the markets focus too much on vendor presentations which tend to emphasise peak hardware performance, and therefore believe that that is the performance achieved by existing codes which must, therefore, already be perfect. If that were the case, solving larger problems would indeed require using more hardware.

If so, investors need to talk more to software developers, who spend a lot of time trying to improve the performance of our codes and working around “features” of the vendors’ software tools. Of course, since we are talking about presentations from hardware companies, there is the continuous background of “It’s only software”, or “That’s just a SMOP (Simple Matter Of Programming)”. That attitude resides deep in the heart of hardware engineers and may help to mislead investors.

2) Improving Code Performance is Bad

Alternatively, the market disliked the fact that this heavily NVidia-dependent code runs faster, and therefore allows people to use less GPU-time (or, equivalently, since this is a hugely parallel problem, fewer GPUs).
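For a perfectly parallel job with a fixed deadline, the equivalence between less GPU-time and fewer GPUs is simple arithmetic. Here is a minimal Python sketch. All the numbers are invented: 2,000,000 units of work, 1,000 units per GPU-hour, a 100-hour deadline, and a hypothetical 4x software speedup. None of them are DeepSeek figures.

```python
import math


def gpus_needed(total_work: float, work_per_gpu_hour: float, deadline_hours: float) -> int:
    """GPUs needed to finish `total_work` within `deadline_hours`,
    assuming the work splits perfectly across GPUs (no communication overhead)."""
    gpu_hours = total_work / work_per_gpu_hour
    return math.ceil(gpu_hours / deadline_hours)


if __name__ == "__main__":
    work = 2_000_000  # hypothetical units of work
    rate = 1_000      # hypothetical units per GPU-hour before tuning
    deadline = 100    # hours
    speedup = 4       # hypothetical software speedup

    before = gpus_needed(work, rate, deadline)
    after = gpus_needed(work, rate * speedup, deadline)
    print(before, after)  # 20 5
```

Under these assumptions, code that runs 4x faster on the same hardware needs a quarter of the GPUs. That is good news for the user, and a direct reduction in GPU demand.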

So the threat is not that the code allows people to move away from NVidia, but that it reduces GPU demand.

Or, in other words, the code has been tuned too much and runs too well!

If this is correct, it’s a rather frightening conclusion, since it suggests that the stock-market would prefer that NVidia did not improve its software environment, or help people to get their codes to run well.

Notes

All of this is personal speculation.

Although NVidia features heavily here, this is not intended as criticism of NVidia, but rather of the stock-market and investor opinion. This example leapt out at me because of my background, but I am sure similar conclusions could be drawn from other examples.

Thanks to Adrian Jackson for his comments.

I do not cover all market types, as some (such as monopsony) do not seem relevant. However, at the very high end of the market one could certainly argue that there are only a few organisations buying the most powerful machines…

See “Is Fortran a Dead Language?” if you think Fortran is not important. Also consider the headline on the March 2025 TIOBE index: “The Dinosaurs Strike Back”, with Fortran at number 11 in the ranking. (Internet archive copy here.)

It is not entirely clear to me whether that market share is only for data-centre GPUs, or for the whole discrete GPU market (including desktop machines). However, it is clear that NVidia is hugely dominant either way!

I am not the only one who thinks software optimisation is what the market fears. See this semianalysis post (https://semianalysis.com/2025/03/19/nvidia-gtc-2025-built-for-reasoning-vera-rubin-kyber-cpo-dynamo-inference-jensen-math-feynman/), which says "The concern is that DeepSeek-style software optimization and increasing Nvidia-driven hardware improvements are leading to too much savings, meaning that the demand for AI hardware decreases and the market will be in a token glut."
