Atomics in AArch64

Better get those compiler flags right!

Introduction

In this post we’ll look at the performance of a simple atomic operation on a couple of Arm® AArch64 machines. In particular we’ll show the improvement that comes from using the simple, single-instruction, atomics in the Arm V8.1a architecture in preference to the more general Load-Locked, Store-Conditional (LL-SC) implementation in the earlier architectures. The improved performance of the newer architecture was mentioned in a tweet, so as I already had a benchmark for this for “The Book”, re-running those benchmarks and writing this up seemed worthwhile.

The Problem

Atomics

In a parallel program there are occasions when different threads need to update shared state in a safe way. At a high level that can be achieved using locks and critical sections. However, that just pushes the problem down a level since the locks themselves must be implemented. That leads us (and hardware architects!) to realise that the hardware must provide instructions which can guarantee that an update to a location can be made without interference from another logicalCPU1 sharing the same address-space.2

These are indivisible, atomic, operations.

Complex Instruction Set Computer (CISC)

In a CISC implementation, adding multiple atomic operations is conceptually simple, since CISC architectures (such as X86 and X86_64) already include instructions which perform a read-modify-write (RMW) operation on memory. Therefore it is enough to add instructions like this which guarantee that the memory in question is not updated by another logicalCPU between the read and the write. In the X86 and X86_64 architectures this is achieved by adding a “lock” prefix3 to such an instruction, as we can see by looking at the code generated for a trivial example like this :-

#include <atomic>

void aincr(std::atomic<int> * value){

(*value)++;

}When compiled by clang++ 11.0.1 for X86_64 with -O3 this generates

aincr(std::atomic<int>*): # @aincr(std::atomic<int>*)

lock add dword ptr [rdi], 1

retHere you can see the single atomic operation with the lock prefix.

Reduced Instruction Set Computer (RISC)

In a RISC implementation things are architecturally more complicated, since part of the RISC philosophy is to have arithmetic instructions only update registers, and never to have an RMW operation on memory. There may therefore be no easy way to encode both a memory operand, an operation (other than load/store), and a second operand.

The classic solution to this problem is to provide a more general pair of operations, a “Load Locked” (or “Load Linked”) (LL) operation and a “Store Conditional” (SC) operation. The LL loads the value from memory, but also remembers the address somewhere deep in the processor4; the SC conditionally stores the new value as long as no other core has interfered with the memory in the time between the LL and the SC.

The details of how large a region of memory is monitored, and which instructions are allowed after the LL but before the SC are architecture and implementation dependent. (On the DEC Alpha AXP, which, I believe, introduced these ideas, the monitored region is at least 16B, but may be up to a whole page, and many instructions (such as taken branches) may be forbidden [or not…]). You can find the details for specific architectures in their manuals. Similarly, what is required to ensure forward progress of the whole program (i.e. to ensure that competing LL/SC operations do not continually shoot each other down so that no logical-CPU makes forward progress) is architecture dependent. However, luckily for us, all of this should be handled for us if we C++ std::atomic to access these operations!

AArch64

Since the ‘R’ in Arm originally stood for “RISC”, its historical approach has, unsurprisingly, been an LL-SC one, with the ldaxr instruction for the load and the stlxr instruction for the conditional store.

We can see those instructions being generated if we compile the same code for AArch64 with no specific architecture specified. Here clang++ 11.0.1 generates

aincr(std::atomic<int>*): // @aincr(std::atomic<int>*)

.LBB0_1: // =>This Inner Loop Header: Depth=1

ldaxr w8, [x0]

add w8, w8, #1 // =1

stlxr w9, w8, [x0]

cbnz w9, .LBB0_1

retYou can see the ldaxr (LL), the stlxr (SC) and the retry test (cbnz .LLB0_1) which is necessary to handle the case where the stlxr (SC) operation fails because some other logicalCPU operated on the relevant memory after we loaded it with the ldaxr but before we performed our store, in which case this logicalCPU must try again from the beginning.

In their great wisdom, the Arm architects realised that code like this has lower performance than can be achieved by a single instruction atomic. This is not because one instruction is always faster than three or four, but because the additional information available to the single instruction allows it to use a different implementation which is faster and thus reduces the exclusive time during which no other logicalCPU can access the relevant data. Therefore in the v8.1-a version of the architecture they added some single instruction atomic operations.

If we compile our sample code telling the compiler that it can use those (by passing the -march=armv8.1-a flag), we get this code :-

aincr(std::atomic<int>*): // @aincr(std::atomic<int>*)

mov w8, #1

ldaddal w8, w8, [x0]

retYou can see that the atomic operation is now a single instruction (ldaddal), and that it is guaranteed to succeed; there is no loop here to handle interference causing failure.

So, What *Is* the Performance Difference?

To see the most extreme difference, we use a micro-benchmark which is doing atomic operations as fast as it can in a loop.

#define InnerReps 1000

static void doIntegerIncrement(void * t) {

std::atomic<uint32_t> * target = (std::atomic<uint32_t> *)t;

for (int i = 0; i < InnerReps; i++)

(*target)++;

}This whole function can then be timed and we can deduce the time per operation. We can then compare those times as we increase the number of cores in use.

Of course, we hope that real codes are not performing as many atomics as this, so this is the extreme case.

How to Present the Results?

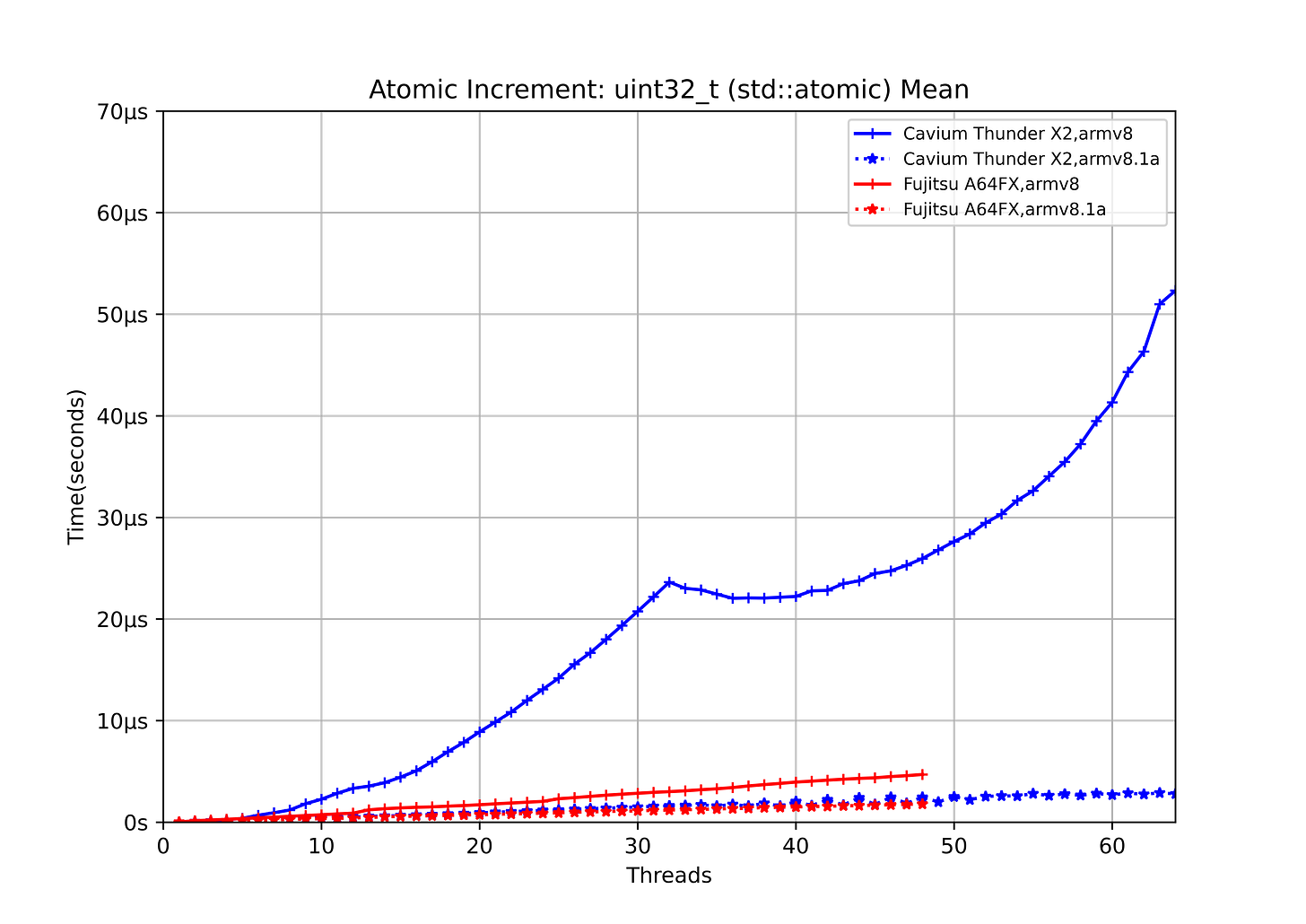

The most obvious way is to show the time each operation takes. That gives us a graph like this :-

That looks very frightening; can it really take >50µs for a single integer increment? This TX2 is a (nominally) 2.1GHz machine, so that’s >105,000 cycles for one simple operation!

Well, yes, it can, but if we think about this benchmark a little more, it’s clear that the time of each operation has to increase at least linearly as we add threads, since the total machine throughput for updating a single location is fixed, but we’re dividing that throughput over each thread. A much better way to display this data is therefore to show the total machine throughput (so now bigger is better). This allows us to see what is happening with few cores while still seeing the overall picture. The best we can hope to see then is that the throughput remains constant as we add cores.

Plotted like that, our data looks like this :-

Here we can see that a single core can perform these increments much faster than more than one. That should be no surprise, since in that case the variable being incremented can sit in the L1$, and there is no data movement. We can also see, though, that even in that case the single atomic instruction is significantly faster than the LL-SC scheme (~1.3x faster on the TX2, and ~1.95x on the A64FX)

To look in more detail at the scaling we can plot the same data excluding the single core case.

TX2

We can see that the performance with the LL-SC implementation drops off rapidly up to around 16 cores but is then reasonably level, with even a slight improvement as we move into the second socket. With the single atomic implementation performance increases when we enter the second socket and there’s some weird odd/even jumping about going on. At up to 3 cores the LL-SC outperforms the single atomic, but above that (and overall) it is significantly slower.

A64FX

The A64FX is more predictable, but again we can see that there are some shared structures between chunks of 12 cores which introduce visible performance changes. Here the single-atomic instruction wins at all core counts.

Another Option

If you don’t know precisely which architecture your code will be running on, then it is better to have the compiler generate a call into a runtime library which will use the best atomics available on the machine executing the code. That can be achieved by using the -moutline-atomics flag which is available in GCC 105 and CLANG 12.

With that flag our sample code looks like this when compiled by GCC 10.3:-

aincr(std::atomic<int>*):

stp x29, x30, [sp, -16]!

mov x1, x0

mov w0, 1

mov x29, sp

bl __aarch64_ldadd4_acq_rel

ldp x29, x30, [sp], 16

retThere is obviously some additional overhead here, but that is outside the critical time, so is unlikely to affect the throughput much.

Conclusions

On both the machines we have looked at (TX2, A64FX) using the single instruction atomics is a big win (~4.8x throughput improvement in the mean over all core counts on the TX2, and ~2.9x on the A64FX).

If atomics matter at all in the code you are running on AArch64, you need to ensure that it, and any parallel libraries it uses, have been compiled with the

-march=armv8.1a(or later architecture) flag, or, for code which may run on either level of the architecture use the-moutline-atomicsflag.CISC architectures sometimes have advantages, and even RISCy architectures have evolved to include CISCy features6.

Boilerplate

In the experiments here we are running one thread/core and tightly binding the threads to a single logicalCPU. (Since the A64FX has no SMT, there is only one logicalCPU/core there, but the TX2 has 4SMT threads/core).

All of the code for these benchmarks is in the Little OpenMP (LOMP) runtime microBM directory in atomics.cc. (Some of the most recent tweaks to the timing code and automatic detection of specific Aarch64 implementations may not have made into the “main” branch yet; if there’s still a “TimerFixes” branch, that’s the one you want. If that branch no longer exists, that means those changes have been accepted and moved into “main”).

Machine and compiler configurations used are the same as in the Processing a File with OpenMP blog.

As usual thanks are due to the Compiler Explorer team for making it so easy to extract assembler samples.

A “logicalCPU” is the Linux terminology for the single entity in the hardware that can execute code. I.e. the thing that has a set of registers including a program-counter. That might be a full core, or, if there is symmetric multi-threading (SMT), a single SMT thread. Whatever the hardware implementation, a logicalCPU is the hardware entity that the OS kernel has to schedule work onto.

Yes, you can manage mutual exclusion without atomic hardware operations (e.g. by using Dekker’s Algorithm), but that is realistically only of historical academic interest now, and is somewhat off-piste for this blog which is about the performance of atomic operations, not about justifying their existence!

In the original implementation the lock prefix asserted a bus-lock which prevented other sockets and cores (there was only one core/socket back then) from accessing memory during the instruction execution. Of course the current implementation is rather different!

Agreed, it could be looking at the state of the cache-line rather than having additional state in the core, and you may not consider cache-line state to be part of the processor.

See the Arm blog Making the most of the Arm architecture with GCC 10 for more discussion of the -moutline-atomics flag.

This should hardly be a surprise, given the implementation opportunities that have opened up in the approaching 40 years since RISC architectures were initially proposed!

In addition to sheer performance, we might add that algorithms that are supposed to be "wait free" can't really be said to be wait free if the atomic operations they rely on are implemented with a loop under the hood. So, on Armv8 they aren't really wait free, on Armv8.1 they are.

Where ARM LDX/STX really shine is in more complex operations. You can do pretty-much-arbitrary operations on up to 128b of data, atomically and locklessly, without suffering from the ABA problem, and with hardware forward progress guarantees.

This is _great_ for things like MPMC queues. Much of the classical complexity gets dropped and you end up with surprisingly readable (and performant) code.

(Ditto for atomic updates of things like status words and such. Expressing things like "if field A, increment field B, if overflow set flag C" (anonymized from an actual usecase) is simple with LDX/STX but between difficult and impossible in classical atomics (depending on how much you care about forward progress and ABA issues))

Unfortunately this was DOA the moment ARM recommended using intrinsics for these operations. You can't access the full power of LDX/STX using intrinsics, mainly due to the memory access requirements (you can't access other memory between the LDX/STX, which compilers won't guarantee), which means that you end up having to rewrite operations into load / CAS loops... which drops all of the niceities of LDX/STX on the floor (no forward progress guarantees, ABA pops up as an issue again, etc, etc) and ends up with them being worse than classical atomics.

Still great if you don't mind writing (extended) asm though. Especially in applications where you expect the atomics to be lightly loaded (and hence throughput more important than time in the critical section) (e.g. doing atomic updates to semi-random locations in a 100MB array)